#cognitive offloading

Text

Smart assistants like Siri and Alexa are convenient—but are they making us mentally lazy? Dive into the psychology of convenience, cognitive shortcuts, and the future of thinking in a world ruled by tech. #CriticalThinking #SmartTech #AIandHumanBehavior #DigitalPsychology #CognitiveScience

#AI and human thinking#Alexa#cognitive offloading#cognitive psychology#critical thinking#digital convenience#mental habits#passive learning#Siri#smart technology#tech and cognition#voice assistants

0 notes

Text

INTELLIGENT MACHINE AND STUPID PEOPLE --- RETROGRESSIVE EVOLUTION

The most important invention in your lifetime is… The Flynn Effect, named after James Flynn, who popularized the idea in 1984, holds that for many years people were getting smarter. But new studies suggest that this effect is waning. In other words, the "Reverse Flynn Effect" is rearing its ugly head. The invention of our times which has shaken the very firm foundations of the idea of using the brain for the…

#AI#cognitive offloading#dailyprompt#dailyprompt-1840#dumb people#Flynn Effect#intelligent machine#machine vs. man#retrogressive evolution#Reverse Flynn Effect#stupid people#unused brains

0 notes

Text

DONE WITH THE NEXT CHAPTER... that was a lot of words for my exhausted brain. however i'm kind of attached to the current summary for chapter five so i'll hold onto six for a few days before i post it i think... maybe post another fic in between

#this will probably be easier than imagined because i have another paper and another two midterms next week 🫠#posting this and then going to sleep like i've shed all my responsibilities by cognitively offloading them onto the internet 👍#“sunny why did you write all day when you're sick” listen i do not control the renjing

5 notes

Text

Noah fence bc I *do* agree with several of the things you're saying but also the way you present some of these things (such as decreased character creation complexity or Rulings over Rules) as unique to 5e as opposed to other RPGs really makes it look like your *only* point of reference for what RPGs that aren't 5e are like is Pathfinder.

Not an original observation but it's legitimately insane how common of a story "I don't want to run d&d 5e anymore but I'm stuck running it because it's the only thing my group will let me GM for them" is. It's fucking everywhere in any non-D&D focused ttrpg space.

Like. I think "the person who does like 90% of the work to make the game actually happen gets to pick what game we play" should be the bare minimum of courtesy towards a GM.

#like specially the character creation complexity#d&d is only really uncomplicated in that regard if the only thing you have to compare it to is pathfinder or rolemaster or w/e#like I do agree that 5e allows players to offload the cognitive load of the rules to the gm in a way other games don't#and that's why people gravitate to it#but it's more of an issue of play culture than an issue of the rules being simpler or more freeform than most other rpgs

3K notes

Text

From Rebecca Solnit:

When you outsource thinking, your brain goes on vacation. "EEG analysis presented robust evidence that LLM, Search Engine and Brain-only groups had significantly different neural connectivity patterns, reflecting divergent cognitive strategies. Brain connectivity systematically scaled down with the amount of external support: the Brain‑only group exhibited the strongest, widest‑ranging networks, Search Engine group showed intermediate engagement, and LLM assistance elicited the weakest overall coupling."

https://arxiv.org/pdf/2506.08872

But also here's a fantastic essay on the subject: "Now, in the age of the internet—when the Library of Alexandria could fit on a medium-sized USB stick and the collected wisdom of humanity is available with a click—we’re engaged in a rather large, depressingly inept social experiment of downloading endless knowledge while offloading intelligence to machines. (Look around to see how it’s going). That’s why convincing students that intelligence is a skill they must cultivate through hard work—no shortcuts—has become one of the core functions of education."

https://www.forkingpaths.co/p/the-death-of-the-student-essayand

74 notes

Note

Have you made a DTW about Cognitive Load and Offloading in the context of Magic? You've made a great Psychology episode but I'm wondering how much could be said about things that add to a player's decision-making and how they offload new information. What have you learned making mechanics like The Initiative or Max Speed that impose new game requirements on players? Why is Arcane Denial's secondary effect of drawing cards forgotten so often? How do you evaluate complexity during vision?

It's a good topic, just one that will probably require a lot of prep work. I'll add it to the "short list".

72 notes

Note

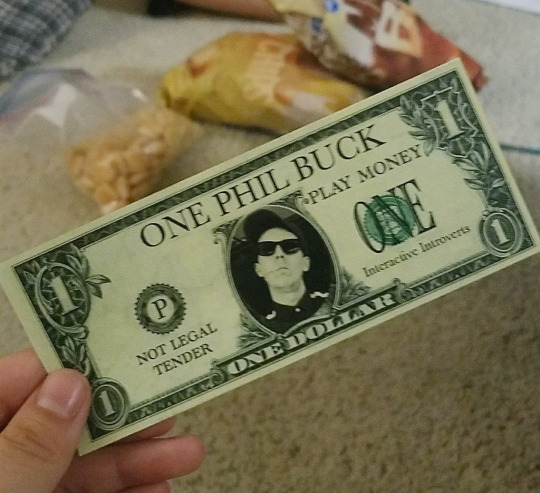

UPDATE!!!

Hello I was hoping you may help with a dnp query if you would be so kind . A queery, even. I recently recalled that Phil had another money gun during ii ,, did the money have any special design like with the phounds during tit?

Danke

oh my god this is actually killing me does anyone have a photo of the phil bucks from ii!!!! yeah they had his face on them but somehow i can't find a pic on any of the ii archives 😭

#okay so the ii archive said the phil bucks started in phoenix so i tried a bunch of date keyword searches starting august 7th#except that didn't work bc no one was saying “i got a phil buck” BUT THEN#i remembered phannies love a spoiler tag and a new prop in the show would be a spoiler#so i searched iispoilers and BAM#GOD WHAT A RUSH#this is why we say fuck ai and fuck cognitive offloading and fuck effort aversion GET INTO THE RESEARCH GRIND

116 notes

Text

"it's ridiculous to say it's easier to cheat academically now" every single student in the first world has a cheating machine in their pocket at all times everywhere they go that can tell them exactly what to think, say and feel and it completely and utterly replaces every part of the critical thinking process in every single way if you want it to. the basic entry point for cheating used to be, at minimum, having the money or the social capital to convince someone to help you cheat. if you cannot reconcile this, then my unfounded assumption based solely on vibes is that you are probably using or have used AI for academic purposes and are defending it solely on the basis that you feel called out for it. it's crazy that you'll get put on a cross on here for using AI for art but academically, the excuses are everywhere.

people have been cheating academically since the beginning of academics. the difficulty to do so, however, has absolutely steeply decreased. not only that, but why are we totally disregarding the fact that people who relied upon cheating the way that a huge number of students rely on ChatGPT today were already seeing reduced academic performance? like... we've known for years that regularly cheating and cognitive offloading the old way leads to worse academic outcomes. so why would the analysis be less critical when a far, far larger percentage of the student population is now doing so and using a tool that is far more accessible and efficient to make it possible?

if you want to make this a socioeconomic issue, which i agree it is, then fine. be a socialist. join an organization and advocate for free education, health care and housing. it is also absolutely an issue accelerated at breakneck speed by COVID. but you have to start by acknowledging there is a problem. you need to put the AI down, even if it puts you at a disadvantage. at the very bare minimum, you need to stop using it to write and summarize for you. it will be more difficult, it will take more time, you will fail, it will be hard. but you need to stop letting the machine that is wrong all the time and easily manipulated by your own biases and the biases of its creators think on your behalf.

53 notes

Text

I admit this is mostly based on how I would redesign a human body to run on batteries, but I did try to keep certain things I remembered in mind. (e.g. Murderbot is obviously bionic, it has to pretend to eat, it can't visually distinguish a combat-grade SecUnit at a glance.)

Additional senses are stored in the face. It's conveniently close to the brain (which is why humans put augments there too), and there are a lot of spare nerve endings there since a SecUnit doesn't need nearly so many in its tongue.

Murderbot's ears (and voice, partially) are electronic, which requires another organ to provide a sense of balance - hence, gyroscopes. Mounting the gyroscopes close to the centre of mass would allow SecUnits to reorient themselves in microgravity without having to push off against another object. That would be one of the recalibrations necessary after its height adjustment.

Murderbot explicitly has to section off part of its lung in order to pretend to eat, which implies a diaphragm as well as a circulatory system (which is an elegant solution for many other things), but its organic components need nutrients for normal function. I'm not sure if it's been said where those come from but I'm assuming some kind of non-digestive injection, which frees up a lot of space for more computers. Murderbot's organic memory seems to be closely linked to its cognition, so it'll probably still die from decapitation, but another processor closer to the physical core would offload subconscious background programmes and greatly improve reaction time by physically shortening the neural pathways. Murderbot also uses it to store its massive media library.

It's hard to improve on a complex appendage like an opposable thumb without making it obviously artificial. The other fingers are less obvious - independently-articulating joints are useful for things like delicate technical work or dismantling firearms one-handed, but even classical musicians rarely do more than have their tendons separated.

SecUnit forearms have only one 'bone', which looks slightly uncanny when twisted but isn't especially distracting unless it draws attention to them by using its energy weapons. The number of humans who have prosthetic forearms and combat enhancements is vanishingly small. Who loses a limb and then plans to keep putting themselves in violent situations anyway? That's what SecUnits are for. (The weapons are mounted in a way to minimise the risk of putting fingers in the path of the beam, as well as cushion against impacts that might otherwise damage the rest of the limb.)

#my art#pencil drawing#fanart#character art#the murderbot diaries#speculative engineering#science fiction art#queer artist#nonbinary artist#transhumanism

38 notes

Text

I saw a post the other day calling criticism of generative AI a moral panic, and while I do think many proprietary AI technologies are being used in deeply unethical ways, I think there is a substantial body of reporting and research on the real-world impacts of the AI boom that would trouble the comparison to a moral panic: while there *are* older cultural fears tied to negative reactions to the perceived newness of AI, many of those warnings are Luddite with a capital L - that is, they're part of a tradition of materialist critique focused on the way the technology is being deployed in the political economy. So (1) starting with the acknowledgement that a variety of machine-learning technologies were being used by researchers before the current "AI" hype cycle, and that there's evidence for the benefit of targeted use of AI techs in settings where they can be used by trained readers - say, spotting patterns in radiology scans - and (2) setting aside the fact that current proprietary LLMs in particular are largely bullshit machines, in that they confidently generate errors, incorrect citations, and falsehoods in ways humans may be less likely to detect than conventional disinformation, and (3) setting aside as well the potential impact of frequent offloading on human cognition and of widespread AI slop on our understanding of human creativity...

What are some of the material effects of the "AI" boom?

Guzzling water and electricity

The data centers needed to support AI technologies require large quantities of water to cool the processors. A to-be-released paper from the University of California Riverside and the University of Texas Arlington finds, for example, that "ChatGPT needs to 'drink' [the equivalent of] a 500 ml bottle of water for a simple conversation of roughly 20-50 questions and answers." Many of these data centers pull water from already water-stressed areas, and the processing needs of big tech companies are expanding rapidly. Microsoft alone increased its water consumption from 4,196,461 cubic meters in 2020 to 7,843,744 cubic meters in 2023. AI applications are also 100 to 1,000 times more computationally intensive than regular search functions, and as a result the electricity needs of data centers are overwhelming local power grids, and many tech giants are abandoning or delaying their plans to become carbon neutral. Google’s greenhouse gas emissions alone have increased at least 48% since 2019. And a recent analysis from The Guardian suggests the actual AI-related increase in resource use by big tech companies may be up to 662% higher than what they've officially reported, that is, 7.62 times the reported figure.

Exploiting labor to create its datasets

Like so many other forms of "automation," generative AI technologies actually require loads of human labor to do things like tag millions of images to train computer vision for ImageNet and to filter the texts used to train LLMs to make them less racist, sexist, and homophobic. This work is deeply casualized, underpaid, and often psychologically harmful. It profits from and re-entrenches a stratified global labor market: many of the data workers used to maintain training sets are from the Global South, and one of the platforms used to buy their work is literally called the Mechanical Turk, owned by Amazon.

From an open letter written by content moderators and AI workers in Kenya to Biden: "US Big Tech companies are systemically abusing and exploiting African workers. In Kenya, these US companies are undermining the local labor laws, the country’s justice system and violating international labor standards. Our working conditions amount to modern day slavery."

Deskilling labor and demoralizing workers

The companies, hospitals, production studios, and academic institutions that have signed contracts with providers of proprietary AI have used those technologies to erode labor protections and worsen working conditions for their employees. Even when AI is not used directly to replace human workers, it is deployed as a tool for disciplining labor by deskilling the work humans perform: in other words, employers use AI tech to reduce the value of human labor (labor like grading student papers, providing customer service, consulting with patients, etc.) in order to enable the automation of previously skilled tasks. Deskilling makes it easier for companies and institutions to casualize and gigify what were previously more secure positions. It reduces pay and bargaining power for workers, forcing them into new gigs as adjuncts for its own technologies.

I can't say anything better than Tressie McMillan Cottom, so let me quote her recent piece at length: "A.I. may be a mid technology with limited use cases to justify its financial and environmental costs. But it is a stellar tool for demoralizing workers who can, in the blink of a digital eye, be categorized as waste. Whatever A.I. has the potential to become, in this political environment it is most powerful when it is aimed at demoralizing workers. This sort of mid tech would, in a perfect world, go the way of classroom TVs and MOOCs. It would find its niche, mildly reshape the way white-collar workers work and Americans would mostly forget about its promise to transform our lives. But we now live in a world where political might makes right. DOGE’s monthslong infomercial for A.I. reveals the difference that power can make to a mid technology. It does not have to be transformative to change how we live and work. In the wrong hands, mid tech is an antilabor hammer."

Enclosing knowledge production and destroying open access

OpenAI started as a non-profit, but it has now become one of the most aggressive for-profit companies in Silicon Valley. Alongside the new proprietary AIs developed by Google, Microsoft, Amazon, Meta, X, etc., OpenAI is extracting personal data and scraping copyrighted works to amass the data it needs to train their bots - even offering one-time payouts to authors to buy the rights to frack their work for AI grist - and then (or so they tell investors) they plan to sell the products back at a profit. As many critics have pointed out, proprietary AI thus works on a model of political economy similar to the 15th-19th-century capitalist project of enclosing what was formerly "the commons," or public land, to turn it into private property for the bourgeois class, who then owned the means of agricultural and industrial production. "Open"AI is built on and requires access to collective knowledge and public archives to run, but its promise to investors (the one they use to attract capital) is that it will enclose the profits generated from that knowledge for private gain.

AI companies hungry for good data to train their Large Language Models (LLMs) have also unleashed a new wave of bots that are stretching the digital infrastructure of open-access sites like Wikipedia, Project Gutenberg, and Internet Archive past capacity. As Eric Hellman writes in a recent blog post, these bots "use as many connections as you have room for. If you add capacity, they just ramp up their requests." In the process of scraping the intellectual commons, they're also trampling and trashing its benefits for truly public use.

Enriching tech oligarchs and fueling military imperialism

The names of many of the people and groups who get richer by generating speculative buzz for generative AI - Elon Musk, Mark Zuckerberg, Sam Altman, Larry Ellison - are familiar to the public because those people are currently using their wealth to purchase political influence and to win access to public resources. And it's looking increasingly likely that this political interference is motivated by the probability that the AI hype is a bubble - that the tech can never be made profitable or useful - and that tech oligarchs are hoping to keep it afloat as a speculation scheme through an infusion of public money - a.k.a. an AIG-style bailout.

In the meantime, these companies have found a growing interest from military buyers for their tech, as AI becomes a new front for "national security" imperialist growth wars. From an email written by Microsoft employee Ibtihal Aboussad, who interrupted Microsoft AI CEO Mustafa Suleyman at a live event to call him a war profiteer: "When I moved to AI Platform, I was excited to contribute to cutting-edge AI technology and its applications for the good of humanity: accessibility products, translation services, and tools to 'empower every human and organization to achieve more.' I was not informed that Microsoft would sell my work to the Israeli military and government, with the purpose of spying on and murdering journalists, doctors, aid workers, and entire civilian families. If I knew my work on transcription scenarios would help spy on and transcribe phone calls to better target Palestinians, I would not have joined this organization and contributed to genocide. I did not sign up to write code that violates human rights."

So there's a brief, non-exhaustive digest of some vectors for a critique of proprietary AI's role in the political economy. tl;dr: the first questions of material analysis are "who labors?" and "who profits/to whom does the value of that labor accrue?"

For further (and longer) reading, check out Justin Joque's Revolutionary Mathematics: Artificial Intelligence, Statistics and the Logic of Capitalism and Karen Hao's forthcoming Empire of AI.

25 notes

Text

btw did you know that taking photos will weaken your memory too -- people who take photos during an exhibition tour e.g. remember what an artwork's name was and where the artwork was situated in (~spatial memory/source memory) considerably less than people who didn't take any photos -- this holds for when photo taking time & screen interference (e.g. screenshot vs looking at a computer, c.f. taking out your camera to take a photo) is accounted for. also this effect is not specific to the content of materials, e.g. it can be class slides with text as well. there is a cognitive offloading account (bc there is something you /upload/ your memory to) as well as an attention disruption account (bc it's an extra task) to explain this phenomenon, which are competing hypotheses.

#called the photo taking effect. i guess it's folk wisdom as well - LIKE DUH! but the thing is we don't quite know whether it's offloading or#disruption that produces the effect - and the literature is mixed

10 notes

Text

I think the worst part about the LLMs as they stand now is that it's becoming clear that they cost too much to use for games, and that even if they didn't cost too much, they wouldn't quite be good enough.

It felt like there was so much potential for LLM judges in party games, for LLM NPCs who could bullshit with some convincing dialog. Needing to actually write dialog for tons and tons of characters is one of the main things that have been holding back so many games for so long.

Can you imagine The Sims if the sims were powered by the imaginary LLMs that don't exist (yet)? If there were controls stapled onto the generation tool that you could nudge or slam down?

Imagine if Inside Out were a multiplayer game, and you controlled someone from the inside with all these other players, calling up specific memories, altering mood, proposing plans of action, and then though some LLM technology that I must make very clear does not actually exist at high enough quality yet, you watched as your composite ideas and plans were played out?

I have long been a fan of interactive, collaborative storytelling, and ever since GPT-2 came around, it seemed like we were on the cusp of having some kind of revolution where all kinds of possibilities would open up. Games that would previously have required huge cognitive load, detail-tracking, and adjudication from a Game Master could have all that stuff offloaded.

And maybe we'll get that some day. Maybe there's someone working with the technology right now who's doing something interesting with it, taking this tool and transforming it into the thing of my dreams. Or maybe there will be some kind of revolutionary advancement of the kind that are supposed to be commonplace, and it'll start actually working at the level that I'd want it to work at in order to play a game with it.

23 notes

Text

How a lack of sleep affects us as a system

we originally posted this on reddit, this is a repost

As most probably know, a lack of sleep significantly impacts your mental health, regardless of whether you are a system or not. It can increase the risk of mood disorders, anxiety, and depression, and potentially exacerbate existing mental health conditions. Sleep deprivation can also impair cognitive functions like decision-making, judgment, and problem-solving, further affecting emotional regulation and well-being.

That being said, we simply wanted to talk about how a lack of bodily sleep affects us as a system.

Knowing that a lack of sleep can worsen symptoms you may already have/experience, we noticed that for us, on the nights where we get little to no sleep, we experience a lot of things tenfold. We dissociate more, communication between headmates becomes harder to accomplish, and we have a much harder time telling if we're switching or not (hard to tell who we even are atm). It even leads to a lot of emotional regulation issues, causing a lot of our other mental health issues to also get worse. It makes our depression worse. Episodes of derealization or depersonalization become more frequent. We are constantly on the verge of an anxiety attack over the littlest thing, and in general it just makes us constantly unstable emotionally.

Three hours ago we isolated ourselves and cried ourselves to sleep for a nap. Even tho we rested for a bit, everything is still cranked up to an 8 emotionally/mentally.

As for a solution for this, we found that practicing proper sleep habits helps everyone within the system, and we recommend that even as a system, you practise healthy sleep habits.

(Do as we say not as we do)

Here are a few things we sometimes do that help us get a good night's rest.

Set a shared sleep routine.

Agree as a system (as much as possible) on consistent sleep/wake times. Even if headmates have different preferences, aim for a compromise that supports the body's needs.

Carefully Choose Sleep-Fronting Headmates

Some systems designate specific headmates to front near bedtime—those who are calm, grounded, or good at helping the body rest. If you don't have a dedicated headmate, ask your fellow headmates who would be willing and comfortable with sharing the bed/body with you during bedtime that you know takes rest seriously. Rotate roles if needed, or assign "rest guardians" who make sure others aren't keeping the body awake. Don't be like me where I invited a headmate who lives off coffee to sleep in the body, because SHOCKINGLY sleeping does not get done. Pick your headmates wisely.

Practise Internal Quiet Time

It's basically like meditation. Set a designated quiet or low-activity period before sleep (30–60 minutes) and use grounding techniques, light inner world visualization, or calming internal conversation to reduce system-wide activity. Encourage all fronting or nearby headmates to focus on body sensations: deep breaths, muscle relaxation, or heartbeat awareness. Grounding helps anchor the system in the present and prepares the body for sleep.

Journaling Before Bed

Write down thoughts, switches, emotions, or tasks from different headmates. This helps offload mental clutter and reduce anxiety-driven insomnia.

Make Agreements on Late-Night Switching

If switching at night is common, agree on "quiet hours" or "no-switch unless necessary" rules, unless a headmate is taking over to help the body rest. This is something we are currently still working on as a system because MULTIPLE SOMEONES think it's okay to do whatever they want regardless of time..

External Aids

We find that external aids are the most important for us, mainly because they signal to everyone that it's time for rest. Weighted blankets, calming music, white noise, or specific scents (lavender, for example) may help soothe multiple headmates at once. If there is a specific activity that you as a collective or the frequent fronter like to do to unwind, this would be the time to do it. For us, we tend to do word searches before bed some nights, and have been considering doing it more often as of late as we notice it helps us relax a lot more than rewatching a youtube video.

We have no idea how to end this but I guess... what are some struggles you deal with as a system when you lack sleep? What are some things you do to help with sleep as a system? We're curious to know how others tackle this problem too.

- Luminous

#pro endo#pro endogenic#plurality#plural system#actually plural#pluralgang#pluralpunk#plural community#anti endo fuck off

7 notes

Note

Hi Devon,

I'm a recent grad planning to apply to psych PhD programs in the fall with the plan to pursue a career in academia (despite how much I know it'll suck I've thought long and hard about it and truly don't think I would be as fulfilled doing anything else). My research experience has been in cognitive development and I keep on being drawn to questions about autism. I am Autistic myself and pretty much think the way we have historically thought about cognitive abilities in autism is garbage. I want to pursue my questions but am honestly terrified about trying to fight my way through the current status quo in autism research.

You're one of very few people I know of in the realm of academia with views on autism that I actually agree with and respect, so I would love your thoughts. Is there hope for actually Autistic individuals pursuing research into autism? Are there any researchers who you've seen building community with Autistic people and listening to Autistic voices? Do you have any advice for surviving in the field as an Autistic person?

Anything you can say to these questions would be much appreciated, thank you!

I'm the type to be brutally honest rather than uplifting and encouraging, so you know, take that into account when adjusting for the skew of my answers.

Any time a person reaches out to me seeking advice on pursuing a graduate degree in psychology of any kind, I advise them against it for the most part. The field desperately needs more research conducted by Autistic people, for Autistic people (and other neurodivergent groups) but I have never known a graduate program to be anything but extremely abusive, exploitative, ableist, and ill-suited to preparing a graduate student today for the reality of academic life as it now is. These mfers are playing by a rulebook that was tired in the 1980s and it's downright detached from reality today. My graduate experience was so traumatic and disillusioning that I chose to abandon academic research or any hope of having a tenure track career altogether. Everyone that I know was either completely abused and traumatized by their advisor, or pod personed by them and transformed into exactly the kind of passive aggressive liberal manipulative ghoul that had once mistreated them. Graduate study ravaged my health and my self-concept.

Is there hope for actually Autistic individuals pursuing research into Autism? Well, there is a growing body of research by us and for us. Journals like Autism in Adulthood do give me hope, and help nourish me intellectually and improve my work.

Are there any researchers whom I've seen building community with Autistic people and listening to Autistic voices? All the ones that I've seen actually operating in practice use methods of communication and workflows that are profoundly inaccessible and harmful to us, even if they are incredibly well intentioned and open to the idea of neurodiversity. There is a lot of decent research coming out these days finally, but I don't know how all of that sausage gets made.

Do I have any advice for surviving in the field as an Autistic person? Make sure you have a very robust support system that exists completely independently from academia. Make sure you have a complete and rich life that has nothing to do with academics and do not give up even a SHRED of it, even if it means accomplishing less and taking more time while you are in school. Have hobbies, friends and loved ones you see daily, a spiritual or physical practice that helps you offload stress, vacations or little adventures within your community that renew you, and work that is applied and grounded rather than just basic/theoretical research. (especially needed if you're in cognitive psych land. shit gets so fuckin abstract and divorced from reality).

Read a lot of fiction or practice some art or do something creative that has nothing to do with your graduate studies. Do not sign up for meaningless committees. Poster presentations do not matter and don't help your CV much at all. Most committees don't either. Read the book The Professor Is In and the blog that goes along with it religiously. Do not trust your advisor. Do not expect your dissertation to be perfect and do not make it your most ambitious project, focus on making it something you can get done quickly that is just "good enough." Cultivate skills that will be useful outside of academia. Do not assume you will ever get an academic job. Read the statistics on how many PhDs there are relative to how many professorships. Speak to people who work outside of academia who have the credentials you are getting. Know how to market yourself and get a job outside of academia if you have to -- consulting especially may be a good fit if you are Autistic and not suited for a 9 to 5 in an office.

Grill any potential advisor at any program you are considered for, hard. if they are defensive being asked questions about their working style, their leadership style, their former students, etc, that means they do not like ever being challenged and that is a red flag. Ask to speak to *FORMER* students. Not current ones. Current ones will not feel safe being honest. Ask for job placement data for graduates of their lab. Look up reviews. Do not pay for graduate school, only apply to fully funded programs otherwise they are scamming you. Remember you can leave at any time. good luck.

48 notes

Text

From a study on the impact of AI on critical thinking skills:

“The findings revealed a significant negative correlation between frequent AI tool usage and critical thinking abilities, mediated by increased cognitive offloading. Younger participants exhibited higher dependence on AI tools and lower critical thinking scores compared to older participants. Furthermore, higher educational attainment was associated with better critical thinking skills, regardless of AI usage. These results highlight the potential cognitive costs of AI tool reliance, emphasising the need for educational strategies that promote critical engagement with AI technologies… The findings underscore the importance of fostering critical thinking in an AI-driven world.”

7 notes

Text

witch from mercury genuinely reads like

Gund Format Cons

A weapon that kills its pilot will fundamentally be used by the rich forcing the poor to pilot them, because if there's one thing the rich love more than killing poor people in proxy wars, it's forcing disposable soldiers to do it.

The only way to offload the severe cognitive load of Gund Format tech is to literally throw corpses at it, producing clones of Newtypes and killing them repeatedly again and again until their consciousnesses are strong enough to offset the load of the machine. This mech is piloted by the ghosts of children, and they all are forced to kill.

Gund Format Pros

You can make a robot arm with it

it makes suletta super happy (canonically unhealthy attachment)

calibarn is cool (hard to refute)

So that's three reasons to two, meaning gund format is good actually. we did it, everyone. our series' ideology is solved

#“let's explore the ramifications of this tech that could never exist in real life and isn't analogous to real tech”#is my least favorite kind of sci-fi.#we've explored nothing about our own world#we've explored nothing about the reality in which we live#instead we've posited 'what if there was a civilian murder beam that ran on orphan blood. but that blood can make a mean prosthetic'#fuck.

29 notes
